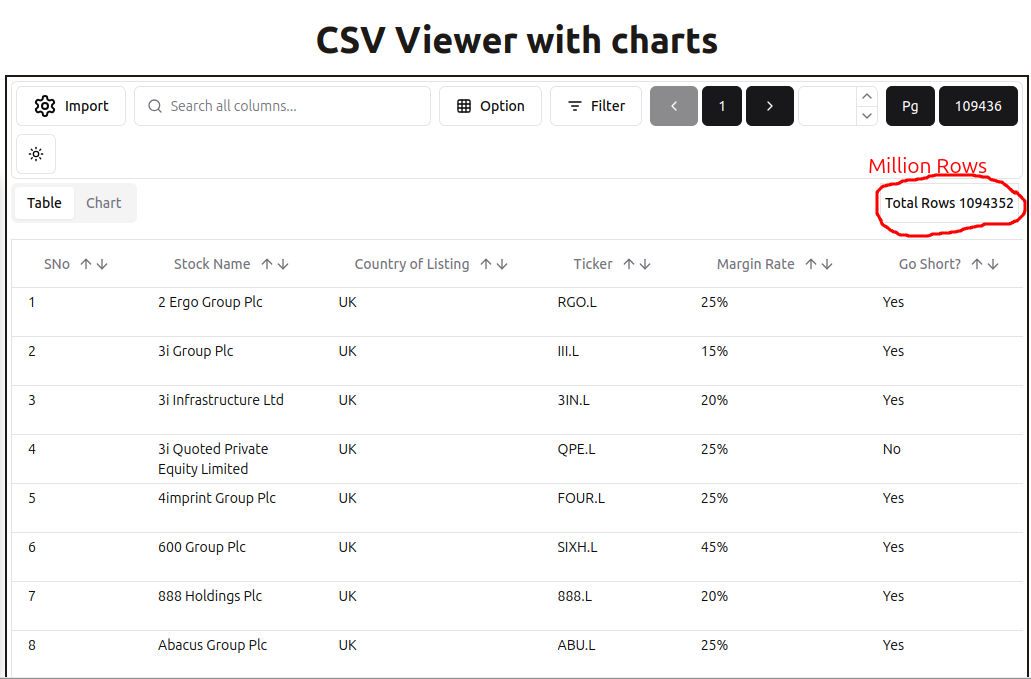

TL;DR: The story of the challenges I faced while displaying a large CSV file with a million+ rows in CSV Viewer.

CSV Viewer crash

I got a mail from a user stating that opening a specific CSV file crashes the CSV Viewer app. I dutifully downloaded the file, and it crashed for me as well. I checked the file size; it was slightly bigger than 50MB. "Well, of course it crashed; I specifically mentioned how to use large files with preview mode." Looks like the user did not bother to read the application notes. I was about to reply with a link about preview mode, but I got curious: why did it crash? My machine has 4GB of RAM, and there were no other tabs open. It should be able to open a 50MB file, shouldn't it?

Internals of CSV viewer

There are two likely candidates that could be causing the crash: parsing the CSV or rendering the data. Generally, rendering is the heavier operation. Most CSV viewers crash on DOM rendering with large data, but there are well-known techniques to mitigate this. Almost all of them rely on the fact that only a limited number of rows is visible to the user at any given time. Some of them are:

- Pagination (plain) : Breaks data into pages, showing a fixed number of rows per page. Users navigate through numbered pages.

- Pagination (Infinite scrolling) : Loads more data as the user scrolls, giving the illusion of continuous data without overwhelming the DOM.

- Virtualization : Renders only visible rows, swapping them out as the user scrolls. Efficient for large datasets.

- Canvas based rendering : Uses HTML canvas for drawing cells, bypassing DOM limitations for better performance with large datasets.
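All of the techniques above boil down to working out which small slice of rows needs to exist on screen right now. Here is a minimal sketch of that calculation, assuming fixed-height rows. The names `visibleRange`, `pageRange`, and `overscan` are made up for illustration and are not taken from any particular library.

```typescript
// Hypothetical helpers, assuming every row has the same pixel height.
interface RowRange {
  start: number; // index of the first row to render (inclusive)
  end: number;   // index one past the last row to render (exclusive)
}

// Virtualization: derive the rendered slice from the scroll position.
function visibleRange(
  scrollTop: number,
  viewportHeight: number,
  rowHeight: number,
  totalRows: number,
  overscan: number = 5, // extra rows above and below to hide flicker while scrolling
): RowRange {
  const first = Math.floor(scrollTop / rowHeight);
  const visibleCount = Math.ceil(viewportHeight / rowHeight);
  const start = Math.max(0, first - overscan);
  const end = Math.min(totalRows, first + visibleCount + overscan);
  return { start, end };
}

// Plain pagination: the same idea, but the slice is chosen by page number.
function pageRange(page: number, pageSize: number, totalRows: number): RowRange {
  const start = Math.min(totalRows, Math.max(0, page) * pageSize);
  const end = Math.min(totalRows, start + pageSize);
  return { start, end };
}
```

With a 600px viewport and 30px rows, only a few dozen rows are ever in the DOM, no matter whether the file has a thousand rows or a million.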

I should also mention that there are some seriously impressive libraries like Fast Grid that continue to push the limits of the DOM. However, at a million rows, a purely DOM-based approach is simply not feasible.

Tracking the bug

I am already using pagination, so rendering was ruled out. I opened the task manager in Firefox and tested the CSV again, only to watch the memory go up from 1GB to 2GB and then crash. This was happening after the parsing (as confirmed from the logs) and before rendering, so the usual suspects, parsing and rendering, were not causing it. It turns out the headless table library was blowing up the memory, possibly because it does some internal processing up front for the future, when sorting, searching, filtering, etc. would be needed.

The React table libs black hole

This should be easy. I will just replace the current lib with another headless lib. I might have to do some work based on the features supported, but that should be okay, I thought. This react-data-grid looks nice. It does force you to use its own styling, but I will find a way out of it. It takes a while, but it does render the table. I guess we are done, this was simple. But let me just try sorting once, aaand CRASH! Hmmm. OK, let me try other libs; surely someone has solved this problem. How about react data table component? It allows a lot of customization with respect to styling. Let's test this, aaand CRASH! It's not even rendering! Let's ask AI to weigh in. It's recommending ag-grid. I had decided against ag-grid in the first place and used Tanstack Table instead, as styling is not easy with ag-grid. But let's see if a commercially used lib is up to the task. Let's open the file again with this and WOW, it rendered it properly. Let's try sorting. It's taking time, but it did sort! Let's sort again to see if it will always take time or if it does something intelligent. And it's again taking the same time. Hmmmm. I will not bore you with more commentary on other libs that I tried. Needless to say, none of them worked.

Trying a different approach: switching to a DB

OK, let's try something else. How about we use IndexedDB? It is supported out of the box by all major browsers, and it should be easy to sort/search/filter with it. Ok, what is happening? Let me check the console. It seems there is some error. How are others doing it? Let's open this example from the internet, and it's giving the same error? That's weird. Let me try some ready-made app, aaand it's giving the same error. What's going on? Let's close the browser and try again. Now everything is working fine. What was going on earlier? Let's come back to it later. Now that it is working, let's try again, and it's giving the same error. Other apps have also started giving the same error! So if one app uses IndexedDB improperly, IndexedDB can stop working for all the apps. WTF! And the user would have no clue. I can't take this risk; I don't want my app to stop working because some other app behaves improperly. IndexedDB is out.

Let me try SQLite as well, one last time. I won't use the IndexedDB part, just use it in memory. I don't have high hopes, but wait, it works! Sorting takes time, but it is working too! But my app has become noticeably slower on first render. The culprit is the 700KB wasm file. Unfortunately, this project is meant for casual users who just want to open a CSV file quickly, not for power users who want to run SQL queries on it, so this approach has to go as well.

Going back to basics

How about I try basic JS as well? I have already tried so many things. Let's open it with this new naive JS implementation, aaand it works! How about sorting? It takes time, but it's working, so a basic array sort is fast enough. But I am not keeping the already sorted array around, and doing so could make things faster. Now, we can certainly do better than naive JS, and since SQLite performed well too, we just need to choose a better data structure (so LeetCode interviews were not useless after all!). This should be a solved problem; I will easily find quality libs for this in the JS ecosystem. This NeDB looks perfect. Let's check it out. Oh no, it's unmaintained. What are the other options? There is tinybase, which has its own custom query language that I don't need. There is WatermelonDB, which is based on SQLite, which I have already rejected. And why do all these recent libs want to add reactivity? All I need is a simple in-memory DB or some lib that can do indexing and search! This LokiJS looks promising, but the last commit was two years ago. Is it still maintained? After fussing around a bit in the GitHub issues, I found a maintained fork of it called Sylviejs. I did a quick test of sorting, searching, etc., and it internally creates indexes on first use, so subsequent operations are fast. I am going with this solution.
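To make the "keep the sorted result around" idea concrete, here is a rough sketch of a per-column sort index that is built on first use and reused afterwards. It only illustrates the general technique; it is not how Sylviejs or LokiJS work internally, and `SortCache`, `sortedIndex`, and `page` are names I made up. It also assumes every cell is a string and compares them as plain strings, so a numeric column would need its own comparator.

```typescript
type Row = Record<string, string>;

// Hypothetical helper: caches one sorted index per column.
class SortCache {
  private rows: Row[];
  private cache: Map<string, Uint32Array> = new Map();

  constructor(rows: Row[]) {
    this.rows = rows;
  }

  // Returns row positions in ascending order of `column`.
  // The expensive sort runs only the first time a column is requested.
  sortedIndex(column: string): Uint32Array {
    const cached = this.cache.get(column);
    if (cached !== undefined) return cached;

    const rows = this.rows;
    const index = new Uint32Array(rows.length);
    for (let i = 0; i < index.length; i++) index[i] = i;

    // Plain string comparison (assumption: cells are strings).
    index.sort((a, b) => {
      const x = rows[a][column];
      const y = rows[b][column];
      return x < y ? -1 : x > y ? 1 : 0;
    });

    this.cache.set(column, index);
    return index;
  }

  // One page of rows in sorted order. Descending order reads the same
  // index from the other end, so it needs no second sort.
  page(column: string, descending: boolean, offset: number, limit: number): Row[] {
    const index = this.sortedIndex(column);
    const last = Math.min(index.length, offset + limit);
    const out: Row[] = [];
    for (let i = offset; i < last; i++) {
      const pos = descending ? index[index.length - 1 - i] : index[i];
      out.push(this.rows[pos]);
    }
    return out;
  }
}
```

Sorting an array of row positions instead of the rows themselves keeps the original data untouched, and toggling between ascending and descending becomes free after the first sort.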

All roads lead to virtualization

Although I detest continuous tables where there's no way to easily track where you were (unlike paging, where it's easy to go back and forth), I did implement it with Tanstack React-Virtual. It was pretty straightforward: I just told the virtualization lib how many rows I have and the size of the row elements, and my virtualized table was ready. NOT!! Well, it was kind of ready, except that when I scrolled, the table header was no longer sticking to its place, and this was specific to the virtualized code. The same code worked fine with DOM-based rendering. After pulling out some hair, I did manage to make it sticky, only to lose the alignment of the data with the header. I had to precalculate the header widths and set them on the individual column elements of each row. Finally, it's working. I opened a few files and scrolled to the bottom, and it was snappy.

Cool, now it's time to open our million-row CSV, and it WORKED. Let's scroll a bit and, wait, what happened? After 500k or so rows, nothing is visible. The logic seems correct to me, and it's not a performance issue: the CPU is idle and there is plenty of memory. After googling a bit, I came to know that I have apparently hit the browser's maximum CSS pixel limit! I didn't know something like this existed, or that a mere mortal like me could hit it with a side project. But why am I hitting this limit after I have virtualized everything? Hardly any elements are rendered, but I do have to set the height of the table to a large pixel value so that scrolling can work. Let's check this other lib, react-window, and it suffers from the same problem. I guess I have to forgo this pixel-based approach, or can I cheat a little? And, my friends, cheating works in this world. The browser limit still exists, but I have done some sorcery, lying to the virtualizer so that it stays within the limit. Finally, a million rows are working in continuous mode as well. Not that I planned for it; I just got distracted. I am also bald now with all this hair pulling!
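For the curious, here is a minimal sketch of one way such a cheat can work (not necessarily the exact code in CSV Viewer): cap the height of the scrollable element and stretch the scroll position so the capped range still covers every row. The cap `MAX_SCROLL_PX` is an assumed, conservative value, since the real limit differs from browser to browser, and all function names are hypothetical.

```typescript
// Assumed cap on element height; real browser limits vary, so this is a guess on the safe side.
const MAX_SCROLL_PX = 10000000;

interface ScaledLayout {
  physicalHeight: number; // height actually set on the scrollable inner element
  scale: number;          // logical pixels covered per physical pixel scrolled (1 = no cheating)
}

function scaledLayout(totalRows: number, rowHeight: number, viewportHeight: number): ScaledLayout {
  const logicalHeight = totalRows * rowHeight;
  if (logicalHeight <= MAX_SCROLL_PX) {
    return { physicalHeight: logicalHeight, scale: 1 };
  }
  // Map the physical scroll range [0, MAX - viewport] onto the logical range [0, logical - viewport].
  const scale = (logicalHeight - viewportHeight) / (MAX_SCROLL_PX - viewportHeight);
  return { physicalHeight: MAX_SCROLL_PX, scale };
}

// First row that should be rendered for a given (physical) scrollTop.
function firstVisibleRow(scrollTop: number, layout: ScaledLayout, rowHeight: number): number {
  return Math.floor((scrollTop * layout.scale) / rowHeight);
}

// Physical `top` for a row, chosen so the row lines up correctly inside the current viewport.
function rowTop(row: number, scrollTop: number, layout: ScaledLayout, rowHeight: number): number {
  const logicalTop = scrollTop * layout.scale;
  return scrollTop + (row * rowHeight - logicalTop);
}
```

The trade-off is that once scaling kicks in, one pixel of scrolling moves through more than one pixel of content, so the table scrolls faster than the wheel or scrollbar suggests.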

Everything else, like sorting/filtering, is still done in Sylviejs (the LokiJS fork); only the rendering is now virtualized. Also, it is optional, so one can switch between paging and continuous mode according to taste.

Happy ending or NOT!

Ok, now I will be able to view CSV files with million+ rows that you can't open in billion-dollar companies' software (Microsoft Excel, wink, wink). I also checked with Excel experts on Reddit to be doubly sure and got some nice downvotes, but I do get to brag that CSV Viewer supports more rows than Excel. I opened the CSV and clicked on a button, aaand it CRASHED! No, it's not crashing in tables. Apparently, I support charts too, which I completely forgot about in my exploration. And since charts can't exploit the fact that only a limited number of rows is visible at a time, I am doomed. Virtualization would not help either, since you want to view the entire chart at once.

How are the bazillion other chart makers solving this? Let's try a few. Ok, apparently they are not solving it. The only thing I can think of is reducing the data that needs to be displayed, but how do I maintain fidelity in that case? What are data experts using? Ok, so it seems to be a common problem, and the way to solve it is sampling. So I am on the right track, but what would be the best compromise? Ok, so there is something called "LTTB" (Largest Triangle Three Buckets) sampling that preserves the shape of the chart, and it works. So I ended up using sampling by default, with an option to disable it if the user wants.
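Here is a compact sketch of LTTB, written from the published description of the algorithm rather than copied from CSV Viewer. It keeps the first and last points, splits the rest into equal buckets, and from each bucket picks the point that forms the largest triangle with the previously picked point and the average of the next bucket. The `Point` type and the `lttb` name are my own choices, and the input is assumed to be sorted by `x`.

```typescript
interface Point {
  x: number;
  y: number;
}

// Downsample `data` to about `threshold` points while preserving its visual shape.
// If threshold is below 3 or not smaller than the input length, a copy of the input is returned.
function lttb(data: Point[], threshold: number): Point[] {
  const n = data.length;
  if (threshold >= n || threshold < 3) return data.slice();

  const sampled: Point[] = [data[0]]; // always keep the first point
  const bucketSize = (n - 2) / (threshold - 2);
  let a = 0; // index of the most recently selected point

  for (let i = 0; i < threshold - 2; i++) {
    // Average point of the NEXT bucket (for the final bucket this is just the last point).
    const nextStart = Math.floor((i + 1) * bucketSize) + 1;
    const nextEnd = Math.min(Math.floor((i + 2) * bucketSize) + 1, n);
    let avgX = 0;
    let avgY = 0;
    for (let j = nextStart; j < nextEnd; j++) {
      avgX += data[j].x;
      avgY += data[j].y;
    }
    avgX /= nextEnd - nextStart;
    avgY /= nextEnd - nextStart;

    // Pick the point in the CURRENT bucket that forms the largest triangle.
    const start = Math.floor(i * bucketSize) + 1;
    const end = Math.floor((i + 1) * bucketSize) + 1;
    let maxArea = -1;
    let chosen = start;
    for (let j = start; j < end; j++) {
      // Twice the triangle area; the constant factor does not affect the comparison.
      const area = Math.abs(
        (data[a].x - avgX) * (data[j].y - data[a].y) -
          (data[a].x - data[j].x) * (avgY - data[a].y),
      );
      if (area > maxArea) {
        maxArea = area;
        chosen = j;
      }
    }
    sampled.push(data[chosen]);
    a = chosen;
  }

  sampled.push(data[n - 1]); // always keep the last point
  return sampled;
}
```

Because the point with the largest triangle wins in each bucket, spikes and dips tend to survive the downsampling, which is exactly what you want when a million points have to fit into a chart a few hundred pixels wide.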

Conclusion

Don't rush into abandoning your favorite table library. These libraries are fine for a few thousand rows, and you should stick with yours if you don't have to support a large number of rows. If you do have a large number of rows, either go with pagination for tables or use virtualization if you want to support continuous scroll. Use canvas for charting a large number of data points, and use LTTB sampling to get an overview. I have made a deliberate choice to read the entire data into memory at once; this is not the optimal choice, but it simplifies the architecture. If you forgo this choice, you can further optimize the app for billion+ rows. Let's wait for a user to report it, and that would be the topic of another blog post.