TL;DR: Tips for code reviewers on avoiding endless feedback cycles.

While reviewing PR metrics, I came across a pull request that had been open for an unusually long time. On closer inspection, the cause was the way it was being reviewed: every change made in response to feedback drew new comments, so the review never reached an end. It also made me realize that some of the things I take for granted about code reviews are not obvious to everyone. So I decided to write down explicit guidelines to keep this from happening again.

Converging code reviews

Here are some tips for keeping a code review convergent:

Give all comments in a single go: When providing feedback on a pull request, resist the urge to send comments as soon as you spot an issue. Review the entire changeset thoroughly and provide comprehensive feedback in one pass, minimizing unnecessary back-and-forth.

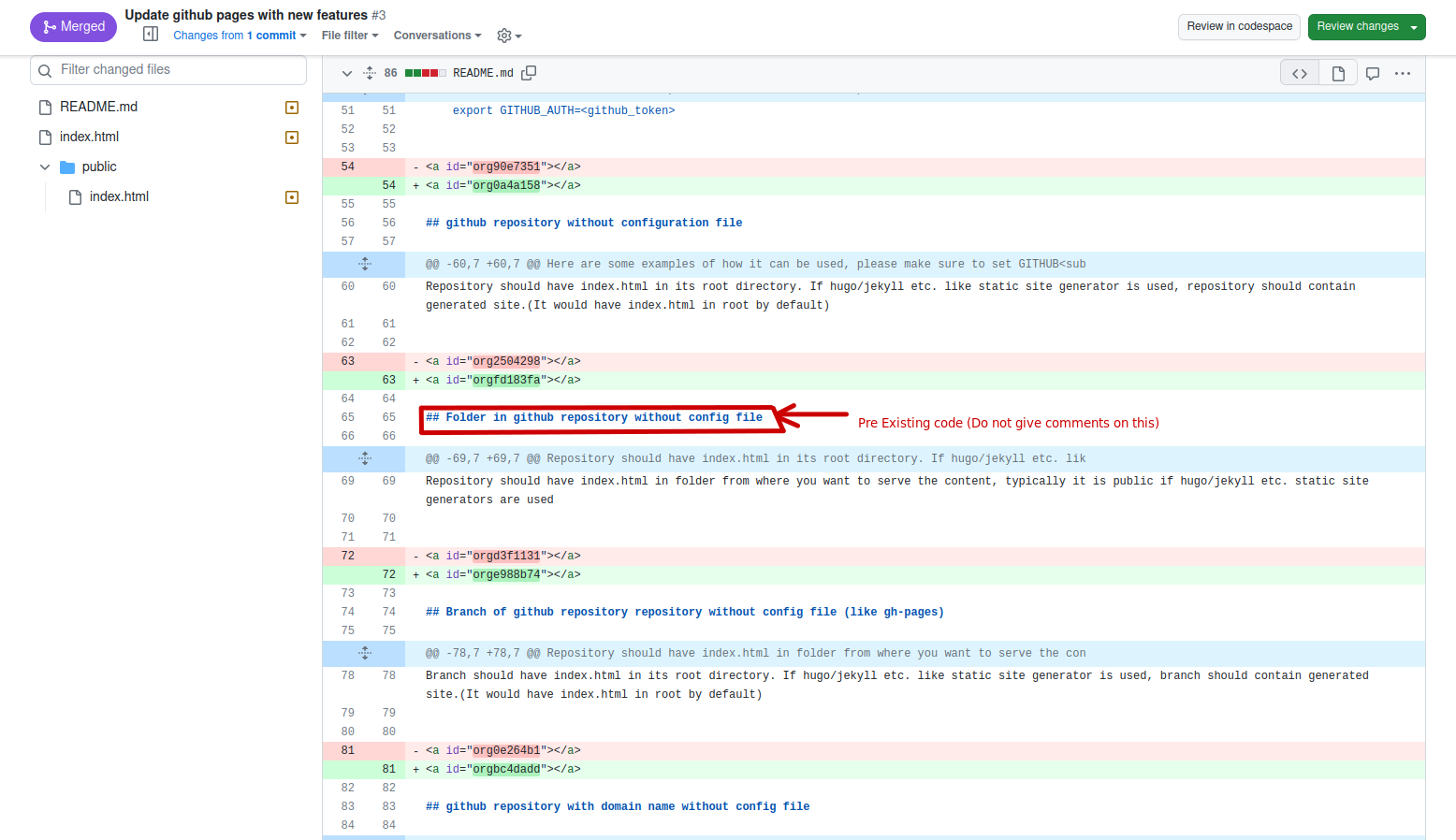

Use a separate pull request for pre-existing issues: If your feedback concerns problems in the existing codebase that are not directly tied to the proposed changes, address them in a separate pull request. Keep the review focused on the changes under review.

Converging code review: If you did not comment on some part of the changeset in an earlier round, treat that part as accepted and refrain from raising new comments on it later. In subsequent iterations, reviewers may comment only on the parts they have already commented on, and only where the earlier comments have not been fully addressed. Because the scope open to comment narrows with each round, the review converges at some point, which keeps it focused and efficient.
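To make the rule concrete, here is a minimal sketch of how a team might check follow-up comments against the first round. Everything in it is an assumption made for illustration: the `Comment` type, the `region` label (for example a function name) used to identify a "part of the changeset", and the `split_followup_comments` helper are hypothetical and not tied to any review tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    path: str    # file the comment is attached to
    region: str  # hypothetical label for the part of the change, e.g. a function name
    text: str


def split_followup_comments(followup, first_round):
    """Split follow-up comments into (allowed, out_of_scope).

    A follow-up comment is allowed only if the reviewer already commented
    on the same file and region in the first round.
    """
    reviewed = {(c.path, c.region) for c in first_round}
    allowed = [c for c in followup if (c.path, c.region) in reviewed]
    out_of_scope = [c for c in followup if (c.path, c.region) not in reviewed]
    return allowed, out_of_scope
```

Comments that land in `out_of_scope` are the ones that would reopen an already accepted part of the change; under the rule above, they belong in a separate pull request or a follow-up ticket rather than in the current review.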

Utilize Automated Tools for Code Style and Commit Messages: Ideally, comments about code conventions or commit message conventions should not be necessary. These aspects should be checked and corrected by automated tools, and passing those checks should be a prerequisite for creating a PR. Let the tools handle consistency, freeing up valuable human review time for more substantive concerns.
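As one possible illustration of such a check, the sketch below validates a commit message before it ever reaches a reviewer. The convention it enforces (a `<type>: <summary>` subject of at most 72 characters, followed by a blank line) is an assumed example, not a rule stated in this post, and `check_commit_message` is a hypothetical helper name.

```python
import re

# Assumed convention for this example: "<type>: <summary>", at most 72 characters.
SUBJECT_PATTERN = re.compile(r"^(feat|fix|docs|refactor|test|chore): \S.*$")
MAX_SUBJECT_LENGTH = 72


def check_commit_message(message):
    """Return a list of problems found in the commit message (empty if none)."""
    lines = message.splitlines()
    subject = lines[0] if lines else ""
    problems = []
    if not SUBJECT_PATTERN.match(subject):
        problems.append("subject must look like '<type>: <summary>'")
    if len(subject) > MAX_SUBJECT_LENGTH:
        problems.append(f"subject is longer than {MAX_SUBJECT_LENGTH} characters")
    if len(lines) > 1 and lines[1].strip():
        problems.append("second line must be blank")
    return problems
```

A check like this could be wired into a Git `commit-msg` hook, which receives the path of the commit message file as its first argument, or run as a CI step so that a PR cannot be opened until the list of problems is empty.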

Conclusion

By following these guidelines and leaning on automated tools, teams can make sure that code reviews converge, making better use of reviewers' time.