TLDR; Describes how author used chatgpt to create workaround for downloading data from cloudflare D1 beta that he would have long given up on if not for chatGPT

You can directly skip to the solution update part if you don't want to read the background story

Background story: Problem

Cloudflare has a new database product called D1 that is based on sqlite. In its alpha version it created automated backups that can be downloaded locally for debugging. Unfortunately when they moved to beta they removed this functionality. You can still restore db to previous version but you can no longer download it locally for development and debugging. This broke my existing workflow and after asking on twitter and searching for solution in google it became clear to me that i would have to find the solution myself!

Background story: Developing Solution

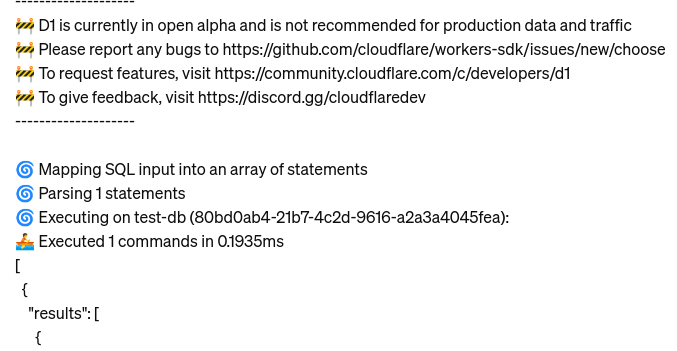

I knew that Wrangler (CF CLI) allows executing queries on remote DB, so I thought I can just dump the data and load it into SQLite. But Wrangler's output contained cli data, query data, and query metadata in JSON, so converting it into something sane that could be loaded into SQLite was going to become a mini-project in itself, I was dreading the prospect of undertaking this additional task that CF had handed me.

I wasn't too hopeful if ChatGPT would be able to do it, but I thought of giving it a try. So, I asked ChatGPT to write a script for me in TypeScript that would extract the data into CSV. To my surprise, ChatGPT extracted the data and gave me a script that would turn it into CSV. While this wasn't exactly what I had in mind, I was still pleased that I wouldn't have to start from scratch in writing the script. However, it still was not extracting data from the CF format as i hoped. After giving a few more clues, ChatGPT was able to write a script that seemed to work.

I completely forgot that I had to compile TypeScript to get it to work on Node, and here is where my laziness kicked in. Given that ChatGPT had already given me a decent script, I thought, instead of wasting my time on installing ts-node, why don't I ask it to convert the script into Deno that has in built ts support? And so, I did, and ChatGPT did its magic and gave me a new script in Deno along with instructions to run it. Had it not been for ChatGPT, there is no way I would have spent time on converting the existing script to Deno.

I tried it, and it didn't work as expected, so I complained to my newfound colleague, Mr. ChatGPT, and it gave me a new script and asked me to report back. I was already getting a weird feeling of becoming a slave of AI where it was instructing me what to do instead of the other way around. Anyway, the script had a bug, and after asking ChatGPT to change the logic, I got a working script.

I tried the generated CSV, but my SQLite tool was not identifying one of the DB fields correctly, so I got another bright idea to turn it into TSV. So, I asked ChatGPT one more time to give me output in TSV instead of CSV. The TSV version worked with the SQLite tool, but I still found loading TSV into SQLite too much work. So, I thought to myself, why do I need to use the SQLite tool to load TSV? I can ask ChatGPT to directly convert it into SQLite DB instead, and ChatGPT obliged.

Solution

Here is the procedure for downloading data from CF and using the script to get sqlite db

Getting Data from Cloudflare D1:

To retrieve data from Cloudflare D1, you can use the wrangler d1 execute command, which allows you to execute SQL queries against your D1 databases. For example, the following command retrieves data from a D1 database named 'test-db' and saves the output to a JSON file named 'output.json':

wrangler d1 execute test-db --command="SELECT * FROM <table>" > output.jsonDescription of script:

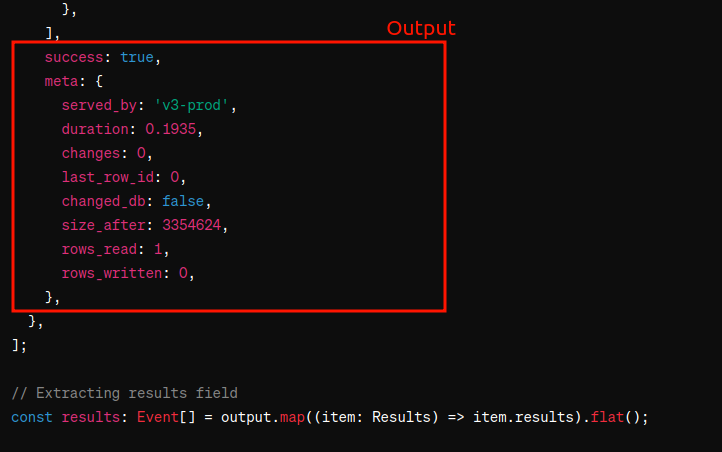

Here is the sample of final script, you will have to modify it according to the table in your database. This script extracts data from Cloudflare D1 and imports it into a SQLite database. It's written in TypeScript, leverages the Deno runtime environment and performs the following tasks:

- Reads the content of the output.json file, which contains data retrieved from Cloudflare D1.

- Parses the JSON data and extracts the necessary fields representing events or records stored in Cloudflare D1.

- Connects to a SQLite database and creates a table named 'table' to store the extracted data.

- Inserts the extracted data directly into the SQLite database table.

// To create input run following

// wrangler d1 execute test-db --command="select * from <table>" > output.json

// To run script execute following this assumes script file name is extractAndConvert.ts

// ~/.deno/bin/deno run --allow-read --allow-write extractAndConvert.ts

import { DB } from "https://deno.land/x/sqlite/mod.ts";

// Use your own table here

interface Table {

Id: string;

Name: string;

}

interface Results {

results: Table[];

success: boolean;

meta: {

served_by: string;

duration: number;

changes: number;

last_row_id: number;

changed_db: boolean;

size_after: number;

rows_read: number;

rows_written: number;

};

}

// Read file content and remove unwanted lines

const fileContent = await Deno.readTextFile('output.json');

let lines = fileContent.split('\n');

// Find the index of the line containing '🚣' and slice the array accordingly

const index = lines.findIndex(line => line.includes('🚣'));

if (index !== -1) {

lines = lines.slice(index + 1);

}

// Join lines back into a single string and parse as JSON

const output: Results[] = JSON.parse(lines.join('\n'));

// Extracting results field

const results: Table[] = output.map((item: Results) => item.results).flat();

// Connect to SQLite database

const db = new DB("output.db");

// Create table

const createTableQuery = `CREATE TABLE IF NOT EXISTS table (Id TEXT, Name TEXT)`;

await db.execute(createTableQuery);

// Insert data into SQLite database

for (const event of results) {

const insertQuery = `INSERT INTO table VALUES ( ?, ?)`;

await db.query(insertQuery, [table.Id, table.Name]);

}

// Close database connection

db.close();

console.log('Data imported into SQLite database successfully.');With this script, developers can easily extract data from Cloudflare D1 and import it into a SQLite database for local inspection, analysis, or further processing. While this solution serves as a temporary workaround for the limitations of Cloudflare D1 in its beta phase, it provides developers with the flexibility to work with their data locally.

Update

cloudflare D1 is GA now and there is export command in wrangler to export the data so you don't need this script. Export command is still buggy and dates are sometimes not exported properly also your db will be unable to process requests while downloading the data

Conclusion:

Chatgpt is amazing tool and making developers productive however it is also making us lazy.